People with amyotrophic lateral sclerosis (ALS) suffer from a gradual decline in their ability to control their muscles. As a result, they often lose the ability to speak, making it difficult to communicate with others.

A team of MIT researchers has now designed a stretchable, skin-like device that can be attached to a patient's face and can measure small movements such as a twitch or a smile.

The researchers hope that their new device could allow patients to communicate in a more natural way, without having to deal with bulky equipment.

"Not only are our devices malleable, soft, disposable, and light, they're also visually invisible," says Canan Dagdeviren, the LG Electronics Career Development Assistant Professor of Media Arts and Sciences at MIT and the leader of the research team. "You can camouflage it and nobody would think that you have something on your skin."

Some devices already used by ALS patients instead measure the electrical activity of the nerves that control the facial muscles. However, this approach requires cumbersome equipment and is not always accurate.

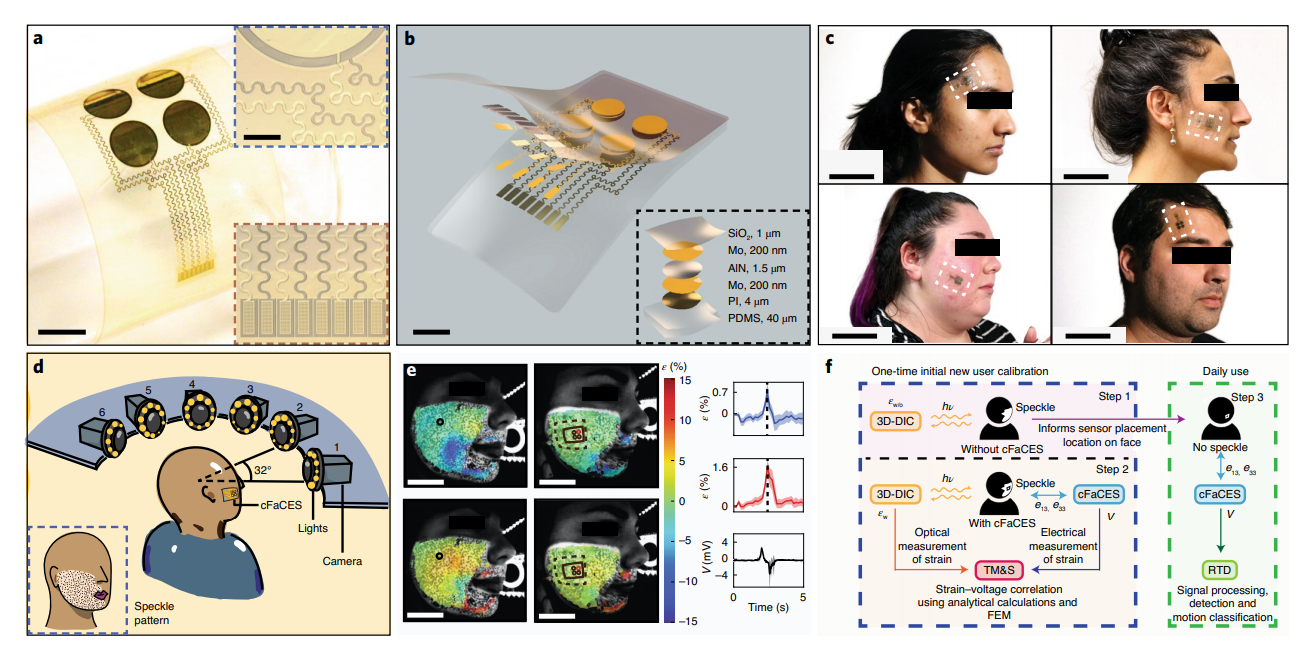

The device they created consists of four piezoelectric sensors embedded in a thin silicone film. The sensors, which are made of aluminum nitride, can detect mechanical deformation of the skin and convert it into an electric voltage that can be easily measured. All of these components are easy to mass-produce, so the researchers estimate that each device would cost around $10.
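To make the sensing principle concrete, here is a textbook model of how a piezoelectric film turns mechanical load into a voltage. It is a simplified sketch that assumes a uniform in-plane stress, ideal electrodes and no charge leakage. It is not the model used in the paper, and the coefficient values in the usage example are illustrative, not measured aluminum nitride values.

```python
# Idealized open-circuit voltage of a thin piezoelectric film loaded in-plane
# (the "31" mode). The generated charge is Q = d31 * stress * A and the film's
# capacitance is C = eps0 * eps_r * A / t, so V = Q / C = d31 * stress * t / (eps0 * eps_r).
# The electrode area A cancels out. Simplified model, not the paper's analysis.

EPS0 = 8.854e-12  # vacuum permittivity in F/m


def open_circuit_voltage(d31: float, stress: float, thickness: float, eps_r: float) -> float:
    """Return the idealized open-circuit voltage (in volts) across a piezoelectric film.

    d31: transverse piezoelectric coefficient in C/N (assumed input)
    stress: uniform in-plane stress in Pa (assumed input)
    thickness: film thickness in m
    eps_r: relative permittivity of the film
    """
    if thickness <= 0 or eps_r <= 0:
        raise ValueError("thickness and eps_r must be positive")
    return d31 * stress * thickness / (EPS0 * eps_r)


if __name__ == "__main__":
    # Illustrative numbers only, not values reported in the paper.
    v = open_circuit_voltage(d31=-2e-12, stress=1e6, thickness=1e-6, eps_r=10.0)
    print(f"open-circuit voltage: {v:.4f} V")
```

The output is linear in stress, which is one reason a simple voltage readout is enough to track how strongly the skin under each sensor is being deformed.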

The researchers used a technique called digital image correlation on healthy volunteers to help them choose the most useful locations for the sensors. They painted a random black-and-white speckle pattern on the face and then photographed the area with multiple cameras as the subjects performed facial motions such as smiling, twitching the cheek, or mouthing the shapes of certain letters. Software then analyzed how the small dots moved relative to one another to determine how much strain each area of skin experienced.

The researchers also used these measurements of skin deformation to train a machine-learning algorithm to distinguish between a smile, an open mouth, and pursed lips. Using this algorithm, they tested the devices on two ALS patients and achieved about 75 percent accuracy in distinguishing these movements, compared with 87 percent in healthy subjects.
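The two steps described above can be sketched in a few lines of Python: first the strain between two tracked speckle dots, then a classifier that maps feature vectors to facial movements. This is a minimal illustration under stated assumptions. Real digital image correlation computes full strain fields by matching image subsets, and the article does not say which machine-learning algorithm the researchers used, so the nearest-centroid classifier here is a stand-in chosen for brevity. The four-value feature vectors and their numbers are hypothetical.

```python
import math
from collections import defaultdict

Point = tuple[float, float]


def engineering_strain(a0: Point, b0: Point, a1: Point, b1: Point) -> float:
    """Strain between two tracked speckle dots, (L - L0) / L0.

    a0, b0: dot positions in the reference (neutral face) image.
    a1, b1: the same dots in the image taken during a facial motion.
    """
    l0 = math.dist(a0, b0)
    if l0 == 0:
        raise ValueError("reference dots must not coincide")
    return (math.dist(a1, b1) - l0) / l0


class NearestCentroid:
    """Stand-in classifier: predicts the label whose mean training vector is closest."""

    def fit(self, features: list[list[float]], labels: list[str]) -> "NearestCentroid":
        groups: dict[str, list[list[float]]] = defaultdict(list)
        for x, y in zip(features, labels):
            groups[y].append(x)
        # Average each feature column separately for every label.
        self.centroids = {
            y: [sum(col) / len(xs) for col in zip(*xs)] for y, xs in groups.items()
        }
        return self

    def predict(self, x: list[float]) -> str:
        return min(self.centroids, key=lambda y: math.dist(x, self.centroids[y]))


def accuracy(model: NearestCentroid, features: list[list[float]], labels: list[str]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(model.predict(x) == y for x, y in zip(features, labels))
    return correct / len(labels)


if __name__ == "__main__":
    # Hypothetical strain values at four facial locations for each recorded motion.
    train_x = [
        [0.08, 0.06, 0.00, 0.01], [0.09, 0.05, 0.01, 0.00],      # smile
        [0.01, 0.02, 0.10, 0.07], [0.00, 0.03, 0.09, 0.08],      # open mouth
        [-0.05, -0.04, 0.01, -0.03], [-0.06, -0.05, 0.00, -0.02],  # pursed lips
    ]
    train_y = ["smile", "smile", "open mouth", "open mouth", "pursed lips", "pursed lips"]
    model = NearestCentroid().fit(train_x, train_y)
    print(model.predict([0.07, 0.05, 0.0, 0.0]))
    print(accuracy(model, train_x, train_y))
```

Accuracy figures such as the 75 and 87 percent quoted above would be computed on held-out recordings rather than on the training data used in this toy example.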

Reference

Nature Biomedical Engineering (2020). DOI: 10.1038/s41551-020-00612-w
